Why not use velocity to compare teams?

So if you read my last post, you might be wondering, why not use velocity to compare teams? Some people do this, some people don’t. The agile software development community is pretty united in their recommendation here though. There are a number of problems with using velocity that way.

So why not use velocity to compare Scrum teams? A quick summary of the problems are:

- every team estimates in their own way

- we can’t normalise their estimates (easily)

- we can’t normalise story point estimates against objective items

- teams in agile practices work in different contexts

- teams should own and be responsible for their own contexts

- story points aren’t a measurement of time

- velocity in agile is easily gamed (and using velocity to compare teams encourages teams even more to game it, which ruins the whole point of it).

I will now run through these items in more detail. So you can understand why using velocity to compare teams is a bad idea.

Each team does their estimations in their own way

Each team estimates the relative points for a set of stories against other stories they have already seen. So team A looks at some stories and might say “that one is about in the middle so let’s call it a 3, those other ones are smaller so let’s call them 1 each, and that one is big so it is a 5”.

Then team B looks at some other stories and might say “that one is about in the middle so let’s call it an 8, those other ones are smaller so let’s call them 3 each, and that one is big so it is a 13”.

So team B’s estimates will be larger and their velocity therefore higher. Even though they’re not more “productive” or “efficient”. The velocity can’t be compared because they have different meanings for the different software development teams.

But can’t we normalize all our estimates?

No you can’t. Remember, these are relative sizes, not absolute. So if a team thinks a story is roughly five or six times bigger than another story, they would be right both in saying that the first story is a 1 and the second is 5, and equally correct in saying the first one is a 2 and the second one is a 13.

Velocity is a common metric in agile and scrum teams

What is important is that they are consistent with themselves over time; i.e. if another story comes along next sprint that is about the same size as the 1, then it is a 1 and not a 2.

A team can be inconsistent with themselves but not with another team. That’s because they are not making estimates against absolute standards, but their own view of the relative sizes between items.

If this relative estimation idea still seems strange. Then think about it this way.

You ask two groups of people to rank and describe the sizes of a golf ball, a tennis ball and a basketball. The first describes them as “small, medium, large”. The second describes them as “tiny, small, medium”. Which is right? Both of them. Because “small” is a relative word. A car is small compared to an airliner, but not compared to a bicycle.

Is the golf ball “tiny”? It is not if you compare it to a tennis ball. But it is if you compare it to a house.

But can’t we normalize story estimates against objective measures?

No you can’t. Story size estimates are relative by definition. If they’re not then you’re not doing story size estimation, you’re doing something else. Which of course you can if you want! But then you’re not doing velocity because velocity is a summation of relative story point estimates.

People keep trying to standardise this agile metrics stuff and it doesn’t work. Let me know if you find a way to make it work. But that still won’t solve the problem, because…

Teams operate in different contexts

Software teams are building different things. If two software teams using an agile methodology were both building exactly the same thing at exactly the same time, you would fire one of the teams, and probably some other people for allowing this to happen.

So even if you found some objective standards against which each team could estimate, the things they are estimating are different. And the contexts in which they are operating are likely to be different too.

One team might be building on a new architecture platform, the other might not. Another team might be refactoring some technical debt as they go, the other might not. One team might have a product owner who changes their mind every five minutes, the other might not. So team comparison isn’t really going to work.

You might be thinking “but that’s exactly it, I can use these drops in velocities to look for these problems and try and fix them!”. That’s true, but it’s missing the point, because…

It is the responsibility of the team to monitor and rectify problems

Velocity can be a useful agile metric for planning

The team performs its own introspection at the end of each sprint and looks for problems and how to fix them. This may include looking at recent dips in velocity. The team doesn’t need a manager breathing down their neck telling them to pick up their game. They should be able to do continuous improvement in agile by themselves.

They should know when their team performance is going well and when they haven’t. And their Scrum Master should help with this, if the team can’t see this. Or Product Owner if the Scrum Master can’t. A scrum agile retrospective is designed for these sorts of team performance discussions.

Finally, and most importantly, velocity is easily gamed

It is trivially easy to game this system. A team following agile principles can just pump up their estimates each sprint, which means their velocity increases each sprint. This is obviously ridiculous and makes the entire metric, and agile estimation process, worthless. I strongly believe, based on experience and anecdotal evidence, that teams will not game the system if you don’t give them any reasons to do so. So don’t give them any reasons to do so.

So what is velocity good for?

Velocity might not seem very useful. If you are working with multiple teams using an agile workflow, kanban boards etc. Each team can use it for their own sprint planning but that’s about it. And agile project management seems harder without it.

Should we just drop it from your agile development process and not do any estimation? Perhaps but perhaps not.

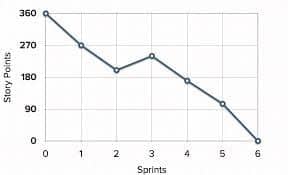

Burndown charts can be used to forward plan agile software development work

For now, assuming you are doing estimation, then velocity has really one use. A team can use their sprint velocity to estimate how much they can put in their next sprint, and how far they will get through a given backlog of work. Based on how far through that backlog they are, and the rate at which they are completing those backlog items. This is where your burndown chart would come into play.

That assumes that the backlog of work has either already been estimated, or an average story point estimate can and should be used (i.e. they haven’t left all the hard stuff for last). A team might also find velocity helpful to review in a retrospective to see how they went, but the team really should know that intuitively without looking at a velocity chart.

Why not use velocity to compare agile teams productivity

I hope you’ve found this interesting and convincing. I feel this is an open and shut case. For my agile coaching work, I always fight against using velocity to compare teams. Any agile transformation attempt should be careful about this dangerous practice.

Question time – have you ever been asked to compare the velocity of two or more teams to find the “underpeforming” ones? I’d like to read about it in the comments.

Comparing Scrum teams with velocity: Frequently asked questions

What is a good velocity for a Scrum team?

Since velocity is based on relative estimates, it is impossible to provide a “good” number or range for this agile metric. You should be looking at a number of metrics, and looking at changes over time.

What is the best metric to compare two agile teams

Comparing teams generally isn’t a good idea. Metrics should be used by a team to help inspect and understand their own performance. And to measure the effectiveness of continuous improvement opportunities over time.

Why velocity should not be used to compare two teams against each other?

For the reasons given above. To recap, that velocity is based on relative estimates, that teams do relative estimation differently, that there isn’t an objective baseline, that teams are working in different contexts.

If you’re still unsure what velocity is and how it works, this video does a good explanation of the topic.